Apple’s plan to fight child sexual abuse material has fueled a privacy debate over the last few days. Why did the topic stir up so much animosity? Was there a misunderstanding, or does Apple really have unwanted access to our photos? Let’s dive in.

New features brought confusion

Apple introduced two new child-protection features for its devices, which will arrive later this year with iOS 15. The first spots and reports child sexual abuse material (CSAM), also known as child pornography. The second spots nude images in messages sent to minors. Although the intentions behind these features are widely acknowledged to be genuine, many people were angered by the way Apple detects this content and suspected that the company was overstepping its bounds by scanning the contents of their phones.

How is Apple looking for child pornography on iPhones?

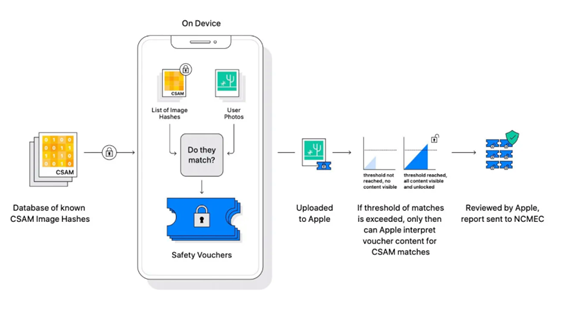

The National Center for Missing and Exploited Children (NCMEC) maintains a database of known CSAM (child sexual abuse material). Apple’s new feature aims to identify users’ images that match this known material. Here is how Apple plans to do it, in five steps:

- Images are uploaded to the iCloud Photo Library, and as part of that upload pipeline, the device runs each image through NeuralHash. NeuralHash is a perceptual hashing system, not a cryptographic one: a neural network turns the image into a string of numbers (a hash) that captures its visual characteristics, so that resized or recompressed copies of the same picture produce the same hash.

- The device then compares the image’s hash against the database of known CSAM hashes, which is stored on the phone in an unreadable (blinded) form. At that point, neither your phone nor Apple knows whether there was a match.

- The result of the comparison is then wrapped up in a safety voucher, and when the photo is stored, so is the safety voucher.

- The voucher is uploaded to iCloud. Only once an account reaches a threshold of 30 vouchers matching known CSAM images can Apple interpret the contents of those vouchers.

- The account is then flagged to Apple’s human moderators, who review the vouchers to confirm whether they actually contain illegal images. If they do, Apple disables the account and reports it to NCMEC, which works with law enforcement.
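The five steps above can be loosely sketched in Python. This is an illustration under stated assumptions, not Apple’s implementation: a classic "average hash" stands in for NeuralHash (which uses a neural network), images are tiny hypothetical grayscale grids, and the match count is computed in the clear, which Apple’s design deliberately avoids. The helper names `average_hash`, `count_matches` and `should_flag_for_review` are hypothetical; only the threshold of 30 comes from the article.

```python
from typing import Iterable, List, Set

# Hypothetical stand-in for NeuralHash: a simple "average hash" (aHash).
# Like NeuralHash, it is a perceptual hash: small visual changes
# (e.g. a slight brightness shift) usually leave the hash unchanged.
def average_hash(pixels: List[List[int]]) -> int:
    """Return the hash as an int: one bit per pixel, 1 if brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits

MATCH_THRESHOLD = 30  # threshold figure cited in the article

def count_matches(images: Iterable[List[List[int]]], known_hashes: Set[int]) -> int:
    """Count images whose hash appears in the (hypothetical) known-hash database."""
    return sum(1 for img in images if average_hash(img) in known_hashes)

def should_flag_for_review(match_count: int, threshold: int = MATCH_THRESHOLD) -> bool:
    """Escalate to human review only once the threshold is reached."""
    return match_count >= threshold

# Usage: a slightly brightened copy still matches the "known" image.
original = [[10, 200], [220, 30]]
brighter = [[20, 210], [230, 40]]
known_hashes = {average_hash(original)}
print(average_hash(brighter) in known_hashes)  # True
print(should_flag_for_review(count_matches([brighter] * 29, known_hashes)))  # False
print(should_flag_for_review(count_matches([brighter] * 30, known_hashes)))  # True
```

A real perceptual hash works on a downscaled image (aHash typically uses 8x8 pixels); the 2x2 grids only keep the example readable.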

Figure 1: The matching process
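Why can Apple read nothing below the threshold in the fourth step? Apple’s published technical summary attributes this to threshold secret sharing. The sketch below shows textbook Shamir secret sharing as a simplified illustration of that idea, not Apple’s actual construction: a hypothetical decryption key is split so that each matching voucher carries one share, and any 30 shares rebuild the key while 29 reveal nothing useful. The field size, key value and function names are assumptions made for the example.

```python
import secrets
from typing import List, Tuple

# Toy parameters (hypothetical): a large Mersenne prime field and the
# article's threshold of 30. Real systems rely on vetted crypto libraries.
PRIME = 2**127 - 1
THRESHOLD = 30

def make_shares(secret: int, n: int, k: int = THRESHOLD) -> List[Tuple[int, int]]:
    """Split `secret` into n shares; any k of them reconstruct it."""
    # Random polynomial of degree k-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(k - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, highest degree first
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def reconstruct(shares: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

# Usage: one share per matching voucher (hypothetical key value).
account_key = 123456789
shares = make_shares(account_key, n=40)
print(reconstruct(shares[:30]) == account_key)    # True
print(reconstruct(shares[10:40]) == account_key)  # True: any 30 shares work
print(reconstruct(shares[:29]) == account_key)    # False with overwhelming probability
```

With fewer than 30 shares, every possible key remains equally consistent with the shares held, so the server learns nothing about voucher contents until the threshold is crossed. `pow(den, -1, PRIME)` needs Python 3.8 or later.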

What about the child safety measure?

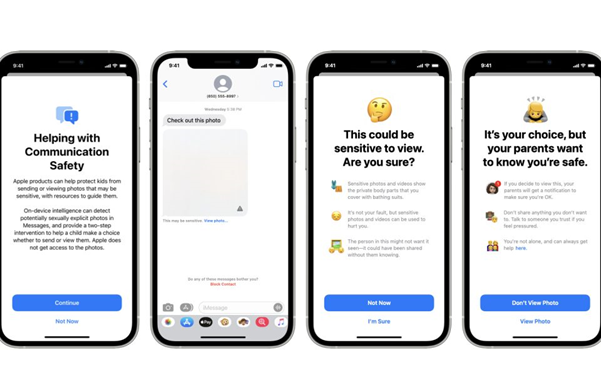

Apple is also adding an image scanning tool for minors in Messages, so that both children and parents can be informed when a child is about to view explicit content. When sexually explicit imagery is shared, the feature warns the minor not to view it and can inform the parents if the minor does. According to Apple, the image scanning algorithm can detect sexually explicit content; it was reportedly trained on pornography. For children aged 12 or under, parents must opt in to the communication safety feature in Messages, and they will then be alerted if an explicit image is opened. Children aged 13 to 17 still receive a warning when they open such an image, but their parents are not notified. Unlike CSAM detection, this feature does not compare images against known CSAM hashes: the analysis is performed on the device by a machine-learning classifier.
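The age rules above amount to a small decision table. The sketch below encodes one reading of them; `SafetyActions` and `communication_safety_actions` are hypothetical names, and treating a child aged 12 or under whose parents have not opted in as receiving no intervention at all is an assumption, since the rules as stated do not spell out that case.

```python
from dataclasses import dataclass

@dataclass
class SafetyActions:
    warn_child: bool
    notify_parents_if_viewed: bool

def communication_safety_actions(age: int, parent_opted_in: bool) -> SafetyActions:
    """Hypothetical policy helper mirroring the age rules described above."""
    if not 0 <= age < 18:
        raise ValueError("communication safety applies to minors only")
    if age <= 12:
        # Assumption: for children 12 and under, nothing happens unless the
        # parents opted in; once they do, the child is warned and the
        # parents are alerted if the image is opened anyway.
        return SafetyActions(warn_child=parent_opted_in,
                             notify_parents_if_viewed=parent_opted_in)
    # Ages 13 to 17: the child is still warned, but parents are not notified.
    return SafetyActions(warn_child=True, notify_parents_if_viewed=False)

print(communication_safety_actions(10, parent_opted_in=True))
# SafetyActions(warn_child=True, notify_parents_if_viewed=True)
print(communication_safety_actions(15, parent_opted_in=True))
# SafetyActions(warn_child=True, notify_parents_if_viewed=False)
```

Keeping the policy in one pure function makes the age boundaries easy to check in isolation from the image classifier itself.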

Figure 2: The new child safety feature

Will Apple look at your photos?

These procedures have stirred up controversy over the right to privacy, and many people have called on Apple to halt its plans. Privacy advocates, cybersecurity experts and customers in general consider the new features invasive. The cybersecurity director at the Electronic Frontier Foundation believes they can be harmful, and the head of WhatsApp also denounced the move, saying it could enable governments to search people’s private phones.

Apple did not expect such a backlash and has spent the last two weeks holding press briefings and communicating about the matter. Here are the concerns it addressed:

- Apple does not look at the photos themselves unless an account meets specific criteria, namely crossing the match threshold, and the matching images are submitted for human review.

- Only photographs uploaded to iCloud Photos will be scanned for CSAM, not those sent and received in the Messages app. However, photos in Messages sent to minors who use the safety feature will be scanned for sexually explicit imagery.

- Only images that match the known-CSAM database can be classified as CSAM; no other image will be.

- Apple will not learn anything about other data stored solely on the device.

- Apple has no intention of allowing governments or law enforcement agencies to exploit this image scanning tool.

As of today, Apple has stated that the plans will proceed. Its business, though, may be in jeopardy. The company has spent years working to make iPhones more secure, and as a result, privacy has become a key part of its sales pitch. But what was once admired is now bothering customers. Many people remain doubtful after Apple’s explanations and believe there is no safe way to build such a system without compromising user security. Will Apple cave under pressure? Only time will tell.